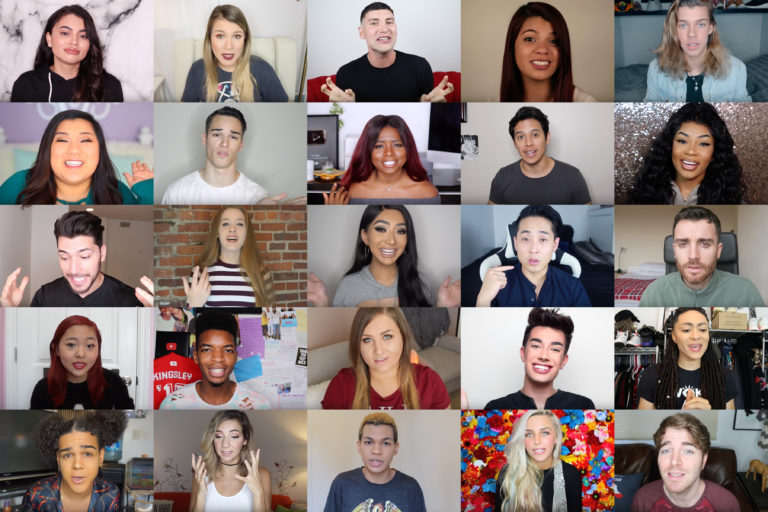

I received my PhD from the University of Southern California (USC), where I was advised by Prof. Laurent Itti and Prof. Barry Boehm. I currently work on Large Multi-Modal Generative Models. I am the author of ABLATOR, a distributed PyTorch framework for Machine Learning experiments. In my 'free' time, I mentor MS students from USC (DeepUSC ✌️) in writing better code and doing Deep Learning research. Over my PhD, I worked with and mentored 20+ students. In a previous life, I worked as a Software Engineer and a Machine Learning Engineer; currently, I am in stealth mode 🤫

I'm interested in improving the generalization of Machine Learning models. My current research interests are in Machine Learning, in particular scaling laws of LLMs for problems that involve multiple modalities. I value good engineering because it is essential for solving problems at scale. A lot of my work is always in progress.

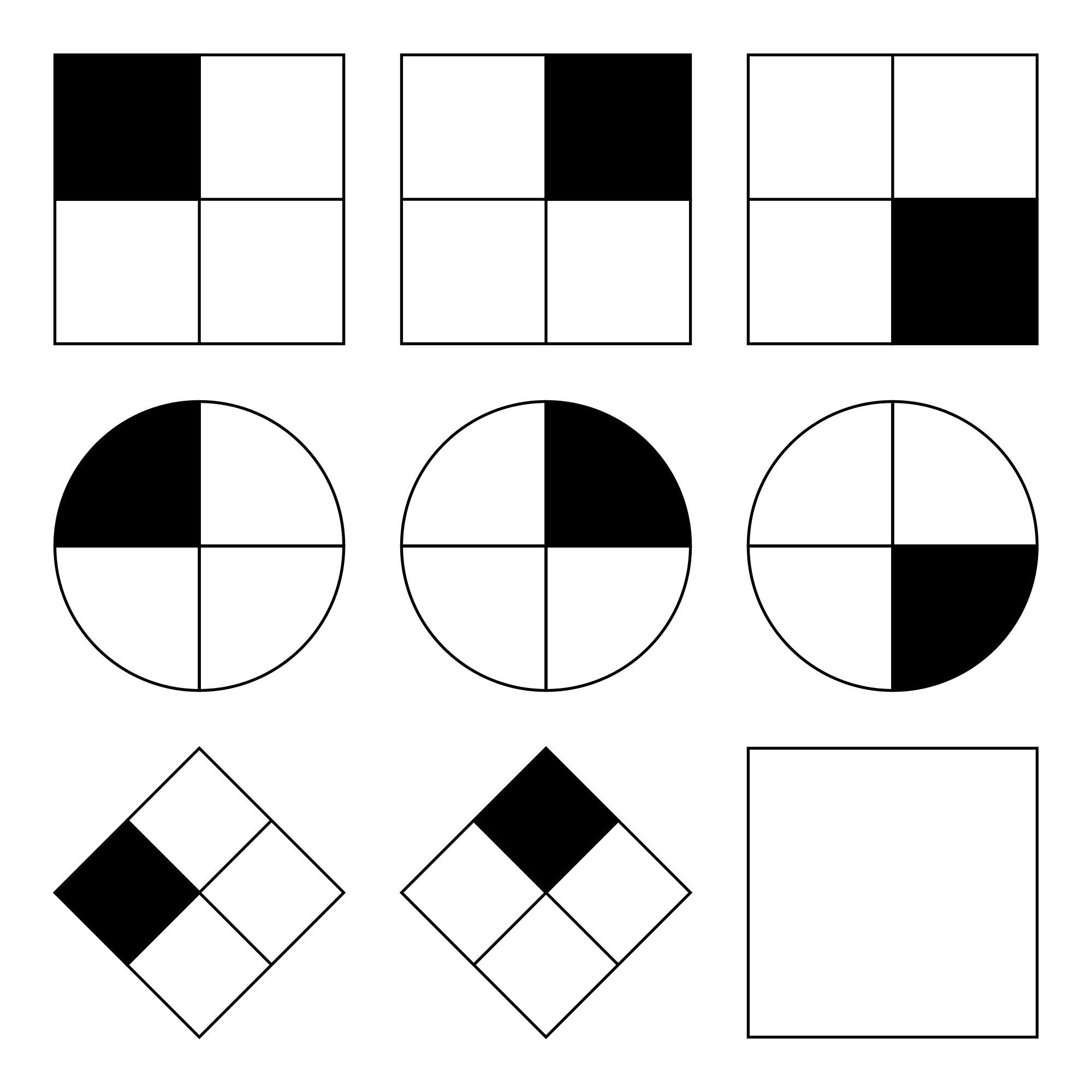

Iordanis Fostiropoulos, Laurent Itti
IJCAI (oral), 2023 Workshop on Knowledge-Based Compositional Generalization
project page / paper

Previous work evaluates the reasoning of Language Models (LMs) using tests that may not be applicable to an LM. In this work, we propose an unbiased and challenging evaluation setting based on complex regular expressions composed of Quasi-Natural Language. We evaluate the inductive reasoning ability of an LM by asking it to generate Facts that abide by the Rules. We find that when a Rule is injected into the training sequence of an LM, the model learns to implicitly associate the Facts with the Rule. When the same model is probed with Inductive In-Context Learning, where it is first asked to generate a sound Rule, it generates probable Facts.

Iordanis Fostiropoulos, Laurent Itti
AutoML, 2023
project page / paper

Ablation experiments are important for improving Machine Learning (ML) models, and they require multiple experimental trials for each component of an ML model. We find a lack of tools and frameworks for horizontally scaling ML experiments, so manual effort is required, which often leads to errors. We propose ABLATOR, a stateful experiment design framework that can scale a single experiment to thousands of trials while being fault-tolerant and robust to errors. We performed the largest ablation experiment to date for Transformer models on tabular data, evaluating 2,337 models in total. Finally, we open-source ABLATOR.

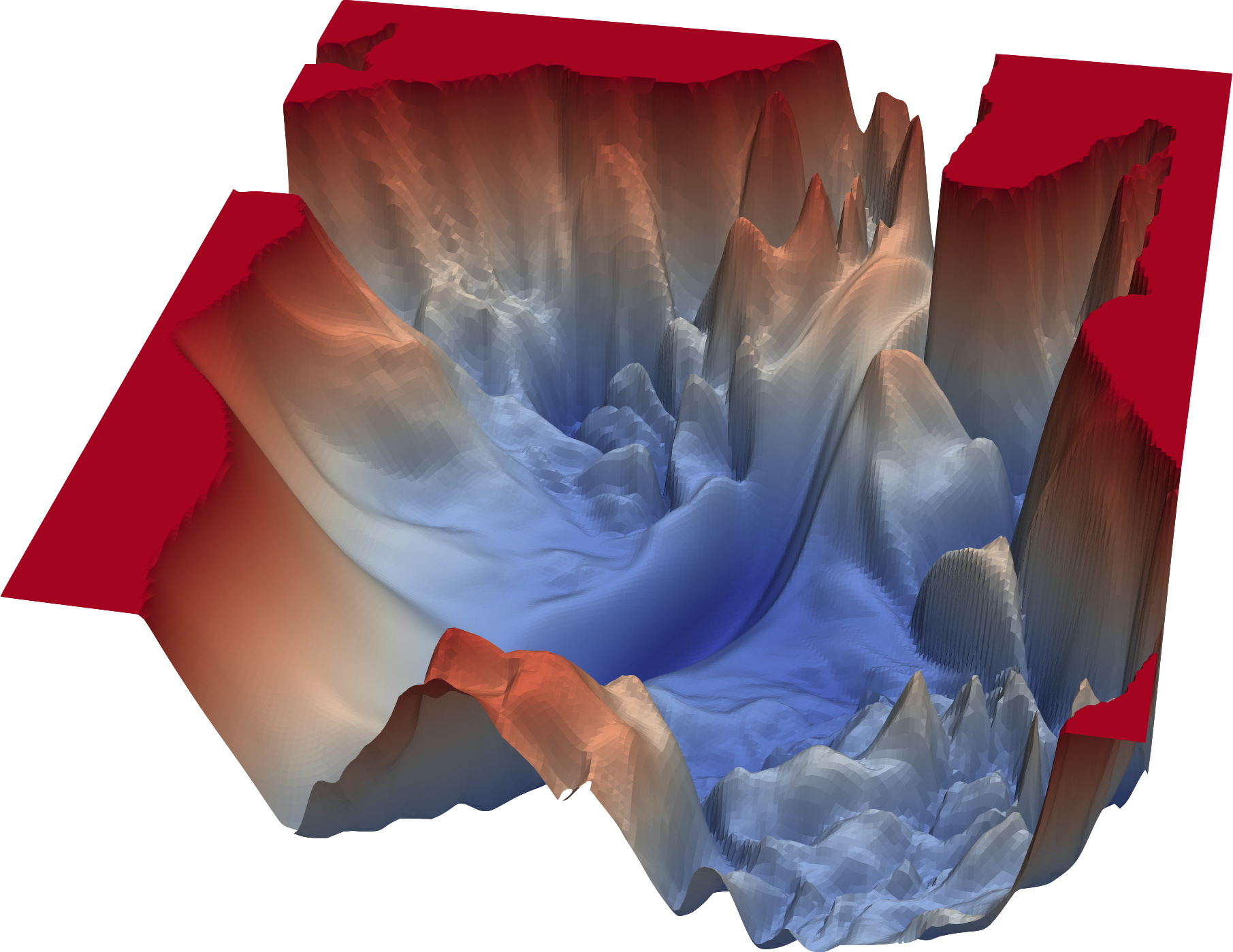

Iordanis Fostiropoulos, Bowman Brown, Laurent Itti
ICLR, 2023 RTML Workshop
project page / paper

We find that errors in the ablation setup can lead to incorrect explanations of which method components contribute to performance. Using insights from our meta-analysis, we demonstrate how current practices can lead to unreliable conclusions. We quantify the selection bias of Hyperparameter Optimization (HPO) strategies and show that only random sampling can produce reliable results when determining the top and mean performance of a method under a limited computational budget.

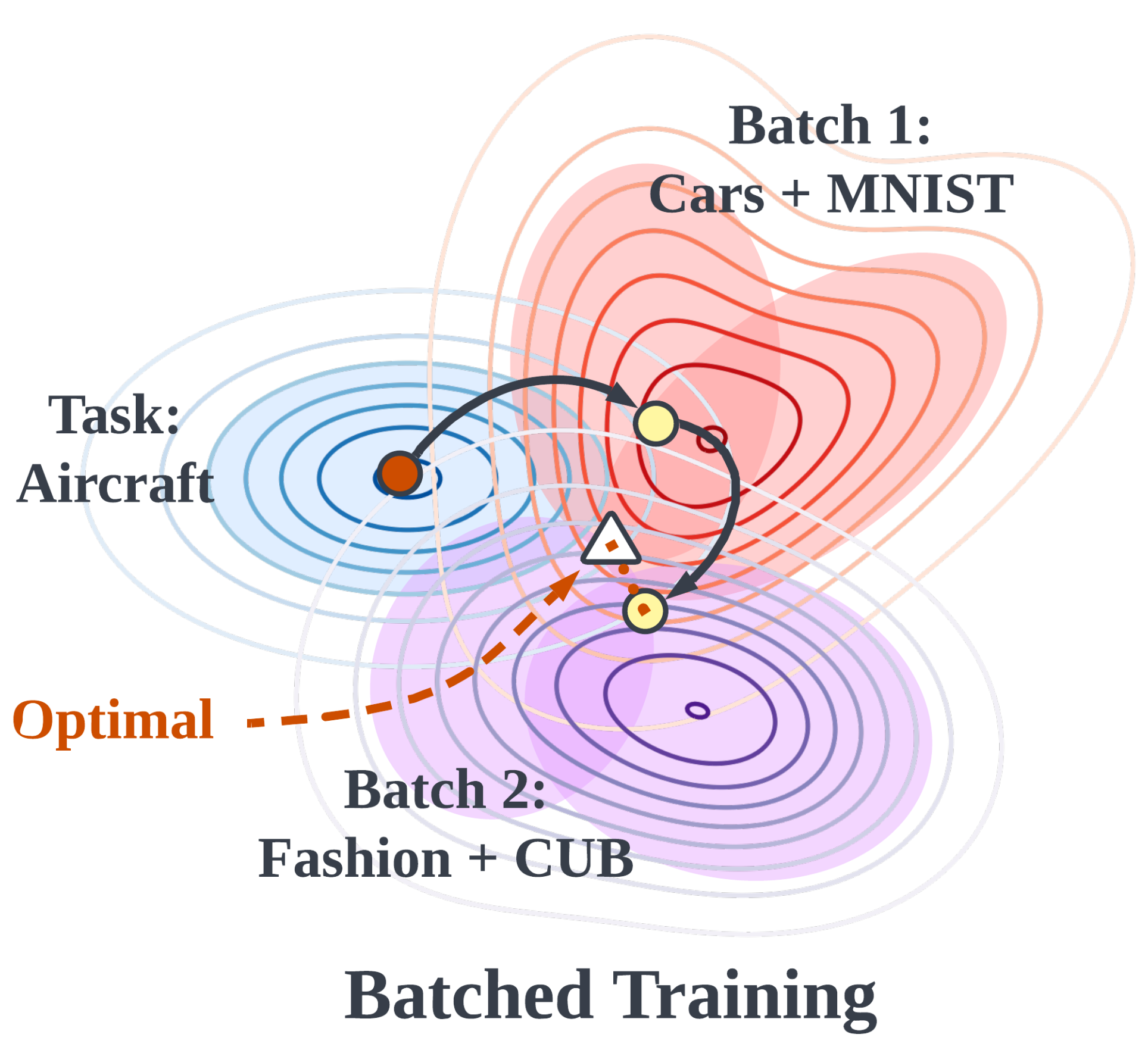

Iordanis Fostiropoulos, Jiaye Zhu, Laurent Itti
CVPR, 2023
project page / paper

We propose a method that incrementally learns new tasks for a 'base model' using multiple learners in a distributed fashion. We consolidate the 'knowledge' of several learners in larger incremental steps, which reduces catastrophic forgetting compared to smaller steps. We find that simpler methods for avoiding catastrophic forgetting outperform the current state of the art on our challenging Stream Benchmark.

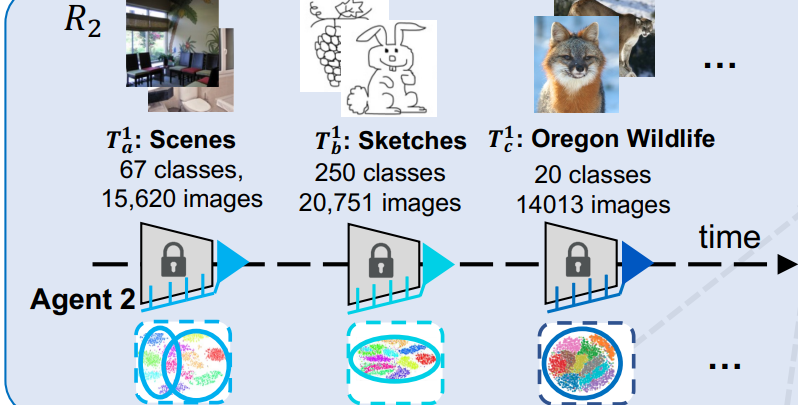

Yunhao Ge, Yuecheng Li, Di Wu, Ao Xu, Adam M Jones, Amanda Sofie Rios, Iordanis Fostiropoulos, Shixian Wen, Po-Hsuan Huang, Zachary William Murdock, Gozde Sahin, Shuo Ni, Kiran Lekkala, Sumedh Anand Sontakke, Laurent Itti
TMLR, 2023
project page / paper

We propose a new Shared Knowledge Lifelong Learning (SKILL) challenge, which deploys a decentralized population of lifelong learning (LL) agents that each sequentially learn different tasks, with all agents operating independently and in parallel. After learning their respective tasks, the agents share and consolidate their knowledge over a decentralized communication network so that, in the end, all agents can master all tasks.

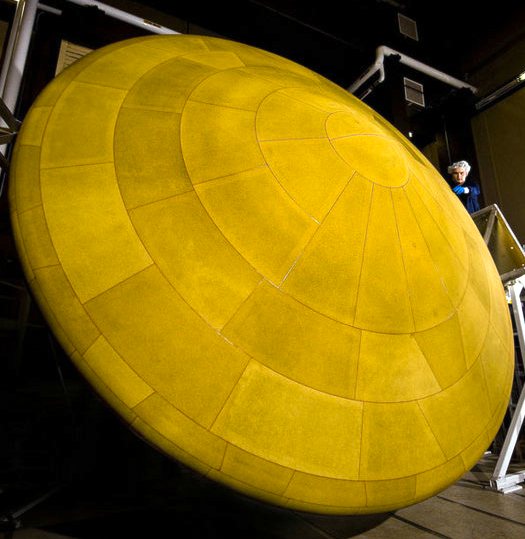

Iordanis Fostiropoulos, Barry Boehm
CVPR, 2022
project page / paper

We propose a new Vector Quantization method for latent features that improves reconstruction performance on images. By decomposing the features in the latent space and learning the auto-encoder end-to-end, we show that the method implicitly decouples the features, which leads to the improved reconstruction.

Junyan Cheng*, Iordanis Fostiropoulos*, Barry Boehm, Mohammad Soleymani
EMNLP, 2021 (* Equal Contribution)
project page / paper

The quadratic complexity of the self-attention mechanism in Transformers limits their deployment on low-resource devices and makes their inference and training computationally expensive. We propose the multimodal Sparse Phased Transformer (SPT) to reduce the complexity and memory footprint of self-attention.

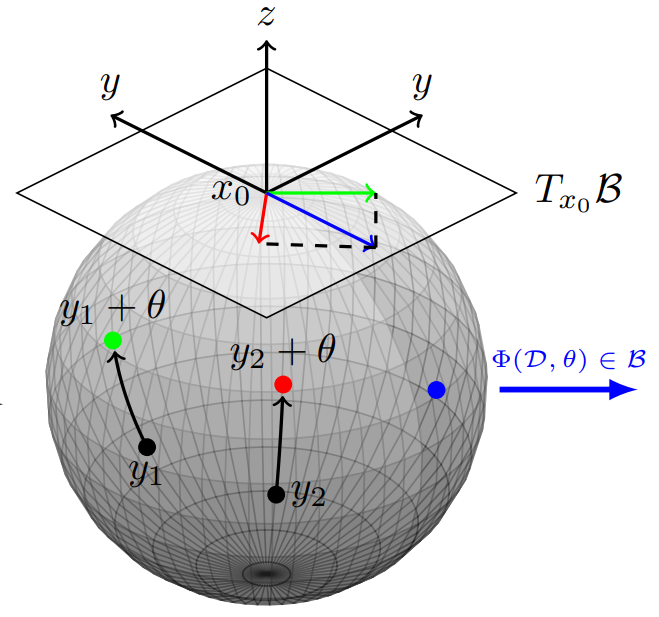

Panagiotis Kyriakis, Iordanis Fostiropoulos, Paul Bogdan
ICLR, 2021
project page / paper

We propose a method to learn representations of persistence diagrams in hyperbolic space, specifically on the Poincaré ball. By representing features of infinite persistence infinitesimally close to the boundary of the ball, we make their distance to non-essential features approach infinity, thereby preserving their relative importance.
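
Why points near the boundary end up "infinitely far" from everything else follows from the geodesic distance on the Poincaré ball. The snippet below is a minimal sketch of that standard distance (unit ball, curvature -1); it is not the learned representation from the paper, and the sample points are purely illustrative. It measures the distance from a point at the origin to points moved progressively closer to the boundary of a 2-D ball.

```python
import math


def poincare_distance(u, v):
    """Geodesic distance between two points inside the unit Poincare ball (curvature -1).

    d(u, v) = arccosh(1 + 2 * |u - v|^2 / ((1 - |u|^2) * (1 - |v|^2)))
    """
    def sq_norm(x):
        return sum(xi * xi for xi in x)

    diff = sq_norm([a - b for a, b in zip(u, v)])
    denom = (1.0 - sq_norm(u)) * (1.0 - sq_norm(v))
    if denom <= 0.0:
        raise ValueError("both points must lie strictly inside the unit ball")
    return math.acosh(1.0 + 2.0 * diff / denom)


# Illustrative points: one at the origin, and others placed at radius r,
# moving closer and closer to the boundary of the 2-D ball.
origin = [0.0, 0.0]
for r in (0.5, 0.9, 0.99, 0.999):
    print(f"r = {r:<6} distance = {poincare_distance(origin, [r, 0.0]):.3f}")
```

For a point at radius r, the distance from the origin equals ln((1 + r) / (1 - r)). Each tenfold reduction in the gap 1 - r therefore adds roughly ln 10 ≈ 2.3 to the distance, and the distance grows without bound as r approaches 1.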

Iordanis Fostiropoulos
April 4th, 2023

A summary of Transformer models, presented to 221 students at the University of Southern California as part of their Artificial Intelligence curriculum (CSCI561).

Served as a reviewer for CVPR, ICCV, NeurIPS, AAAI, AutoML, IJCAI, and AISTATS.